Thinking-while-Generating:

Interleaving Textual Reasoning throughout Visual Generation

Abstract

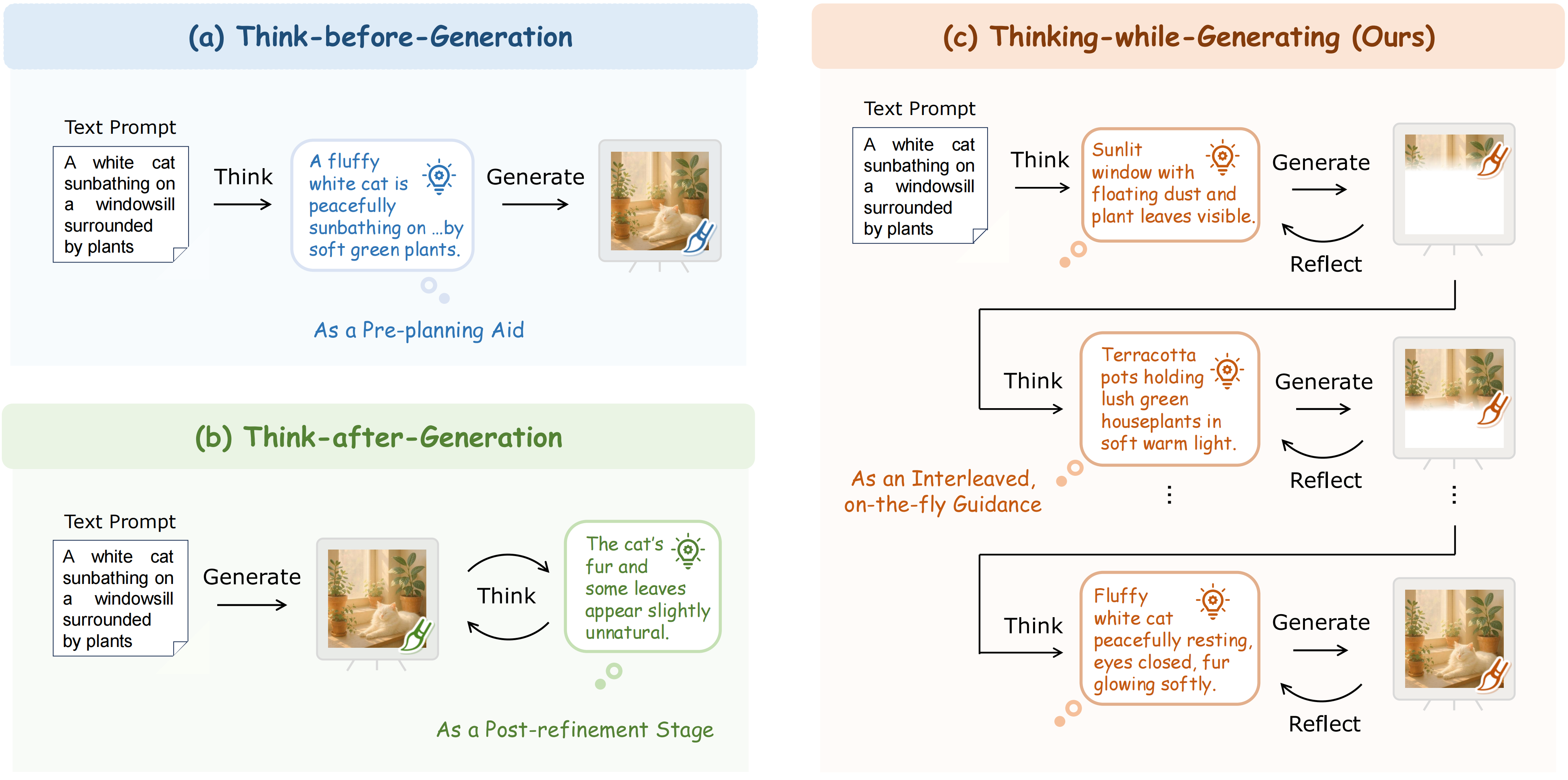

Recent advances in visual generation have increasingly explored the integration of reasoning capabilities. Existing approaches incorporate textual reasoning (i.e., thinking) either before the generation process (as pre-planning) or after it (as post-refinement), yet they lack on-the-fly multimodal interaction during the generation itself.

In this preliminary study, we introduce Thinking-while-Generating (TwiG), the first interleaved framework in which textual reasoning co-evolves with the visual generation process. As visual content is progressively generated, textual reasoning is interleaved to both guide upcoming local regions and reflect on previously synthesized ones. This dynamic interplay produces more context-aware and semantically rich visual outputs.

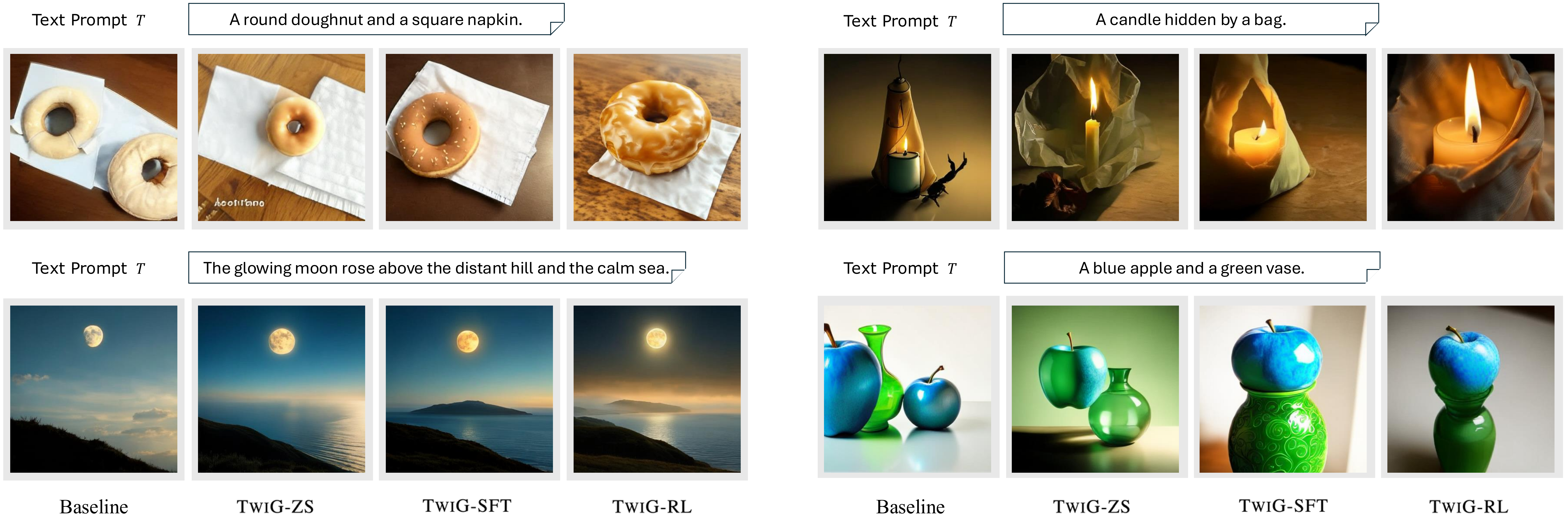

To unveil the potential of this framework, we investigate three candidate strategies: zero-shot prompting, supervised fine-tuning (SFT) on our curated TwiG-50K dataset, and reinforcement learning (RL) via a customized TwiG-GRPO strategy.

(a) Think-before-Generation injects a pre-planning thought prior to synthesis, limiting fine-grained control.

(b) Think-after-Generation verifies and revises the image only after completion, lacking timely adjustment.

(c) Our Thinking-while-Generating interleaves thoughts and reflections throughout synthesis for on-the-fly guidance.

Framework

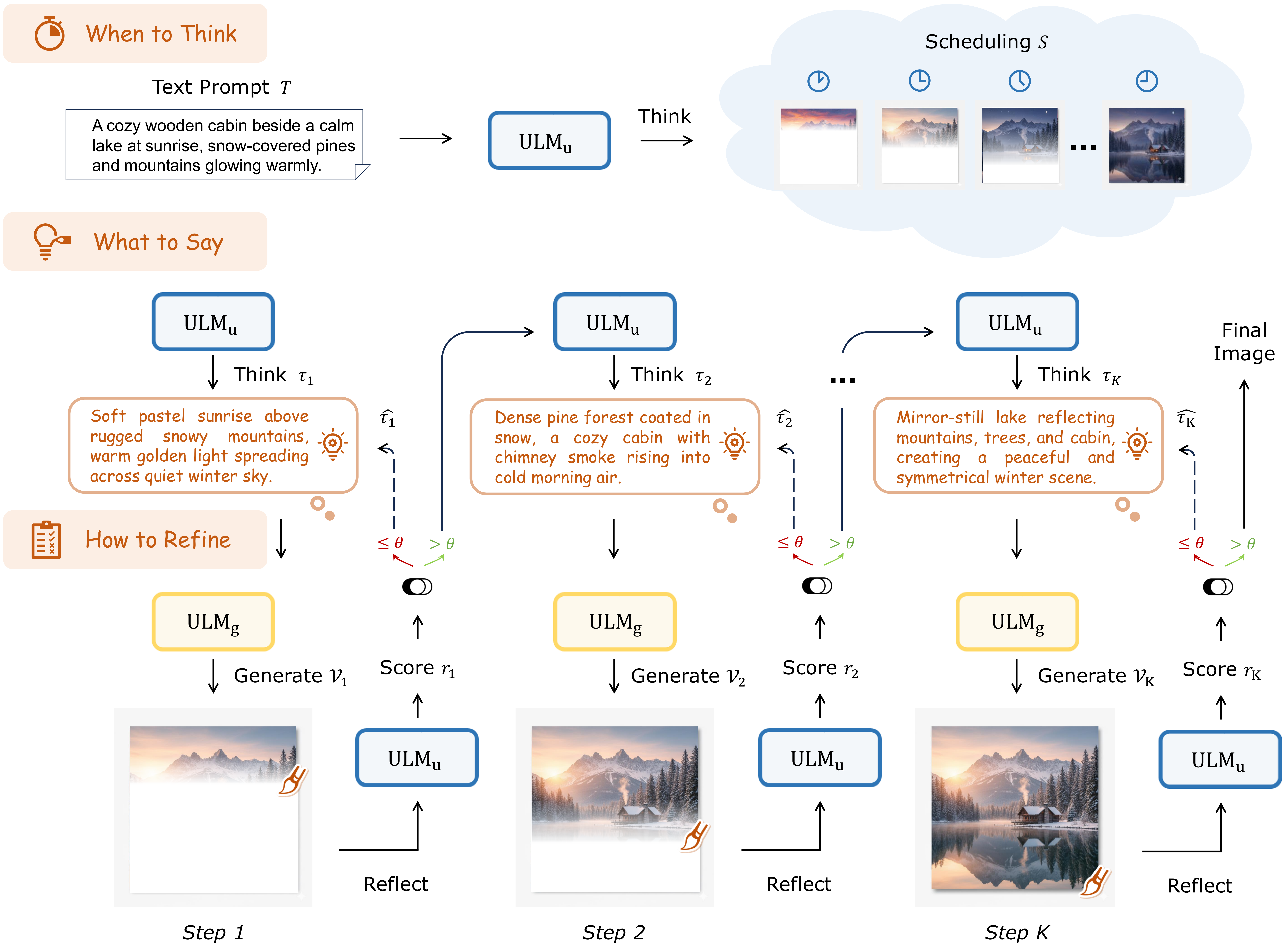

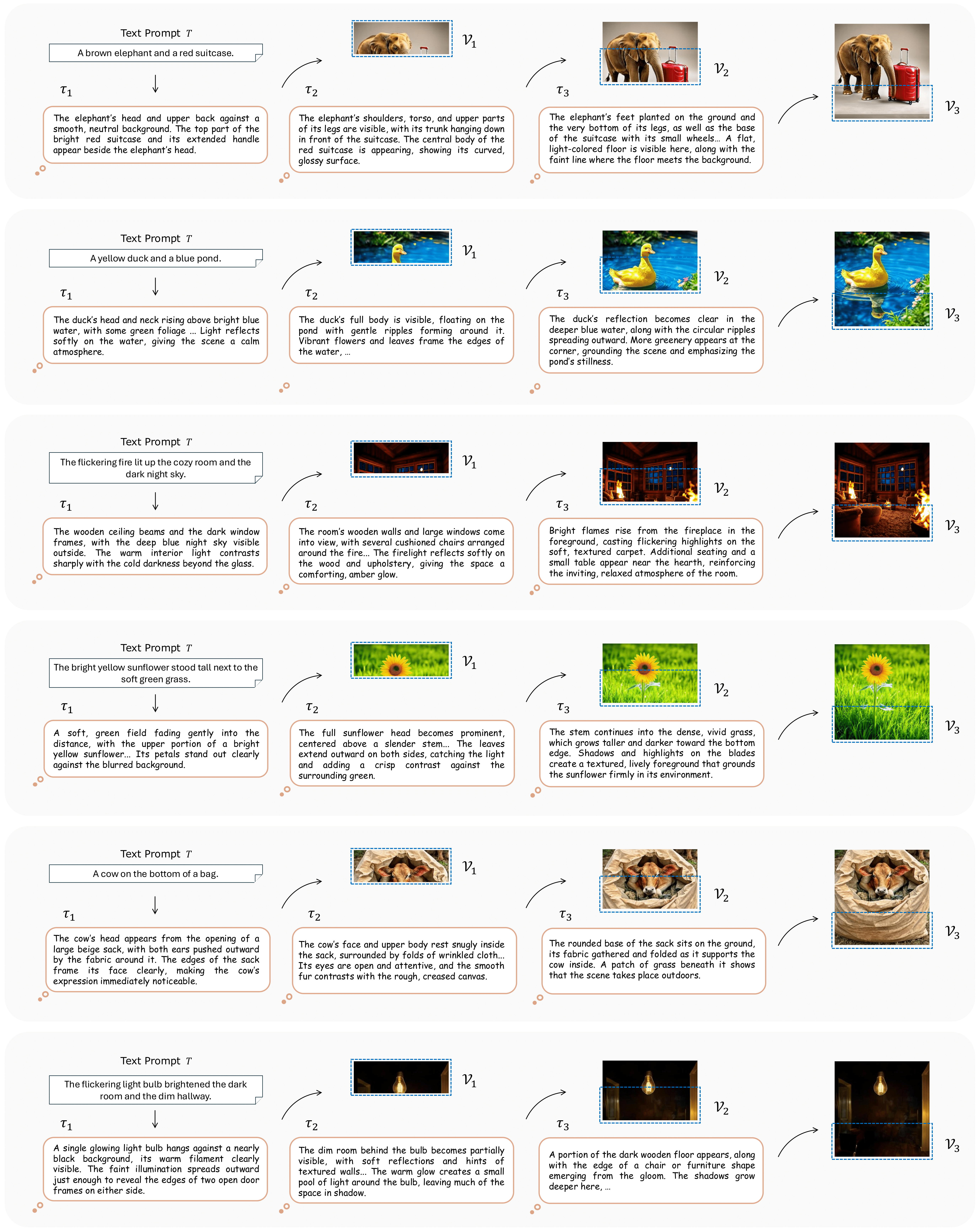

When to Think

Given the input prompt $T$, the model determines an interleaved reasoning schedule $\mathcal{S}$ that decomposes the generation process into controllable sub-tasks.

$$ \mathcal{S} = \mathrm{ULM}_{u}(T) $$
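As a rough illustration of what a schedule $\mathcal{S}$ could look like as a data structure, the sketch below splits a sequence of visual tokens into contiguous regions, one per reasoning step. This is only an assumption for illustration: in the framework the schedule comes from the model itself, whereas `uniform_schedule`, `Step`, and the even split are hypothetical stand-ins that do not appear in the source.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Step:
    """One entry of a hypothetical schedule S."""
    index: int  # step k
    start: int  # first visual token of region V_k (inclusive)
    end: int    # one past the last visual token of region V_k


def uniform_schedule(num_tokens: int, num_steps: int) -> List[Step]:
    """Split num_tokens visual tokens into num_steps contiguous regions.

    Hypothetical stand-in for S = ULM_u(T): a real schedule would be
    predicted by the model from the prompt, not fixed in advance.
    """
    if num_steps <= 0 or num_tokens < num_steps:
        raise ValueError("need at least one token per step")
    base, extra = divmod(num_tokens, num_steps)
    steps: List[Step] = []
    start = 0
    for k in range(num_steps):
        # The first `extra` regions absorb the remainder, one token each.
        length = base + (1 if k < extra else 0)
        steps.append(Step(index=k, start=start, end=start + length))
        start += length
    return steps
```

Each `Step` then marks where a thought would be inserted and which region it is meant to guide.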

What to Say

At each step $k$, a textual thought $\tau_k$ is generated to guide the local visual region $\mathcal{V}_k$, conditioned on all previous context.

$$ \tau_k = \mathrm{ULM}_{u}(T, \{\tau_j\}_{j \lt k}, \{\mathcal{V}_j\}_{j \lt k}) $$
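The conditioning set in the equation above can be pictured as an interleaved context that grows by one thought and one region per step. The sketch below builds such a context for step $k$. The function name, the `(kind, payload)` segment format, and the thought-then-region ordering are assumptions made for illustration, since the equation only specifies which items are conditioned on.

```python
from typing import List, Sequence, Tuple, Union

# A context segment is a (kind, payload) pair; this format is hypothetical.
Segment = Tuple[str, Union[str, List[int]]]


def build_thought_context(
    prompt: str,
    thoughts: Sequence[str],
    regions: Sequence[Sequence[int]],
) -> List[Segment]:
    """Assemble the context (T, {tau_j}_{j<k}, {V_j}_{j<k}) for step k.

    `thoughts` and `regions` hold the k previous thoughts and the visual
    tokens of the k previous regions; both are empty at the first step.
    """
    if len(thoughts) != len(regions):
        raise ValueError("expected one region per previous thought")
    context: List[Segment] = [("prompt", prompt)]
    for tau_j, v_j in zip(thoughts, regions):
        context.append(("thought", tau_j))    # tau_j guided region V_j
        context.append(("region", list(v_j)))  # visual tokens of V_j
    return context
```

At the first step the context contains only the prompt, so the first thought depends on $T$ alone.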

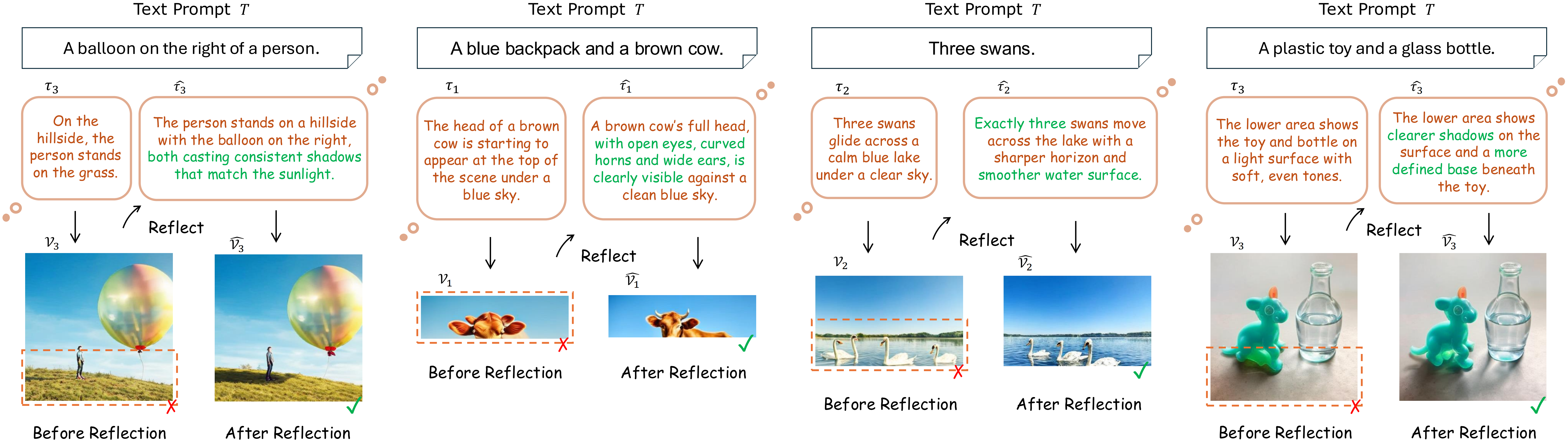

How to Refine

Before the next step, a critique $ c_k = (r_k, \hat{\tau}_{k}) $ is generated, where $r_k$ is a critic score for region $\mathcal{V}_k$ and $\hat{\tau}_{k}$ is a revised caption. If the score is low, a local reflection is triggered that refines region $\mathcal{V}_k$ into $\hat{\mathcal{V}}_k$.

$$ c_k = \mathrm{ULM}_{u}(T, \{\tau_j\}_{j \leq k}, \{\mathcal{V}_j\}_{j \leq k}) $$
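Putting the think, generate, and critique steps together, the control flow might be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: `think`, `generate`, and `critique` are hypothetical callables standing in for the model, the `threshold` value and the single round of reflection per step are assumptions, and regions are treated as opaque objects.

```python
from typing import Any, Callable, List, Sequence, Tuple

ThinkFn = Callable[[str, Sequence[str], Sequence[Any]], str]
GenerateFn = Callable[[str, Sequence[str], Sequence[Any]], Any]
CritiqueFn = Callable[[str, Sequence[str], Sequence[Any]], Tuple[float, str]]


def twig_loop(
    prompt: str,
    num_steps: int,
    think: ThinkFn,
    generate: GenerateFn,
    critique: CritiqueFn,
    threshold: float = 0.5,  # assumed cutoff for a "low" critic score
) -> Tuple[List[str], List[Any]]:
    """Interleave thoughts, region generation, and local reflection."""
    thoughts: List[str] = []
    regions: List[Any] = []
    for _ in range(num_steps):
        # What to say: tau_k conditioned on the prompt and all earlier steps.
        tau_k = think(prompt, thoughts, regions)
        # Generate region V_k guided by tau_k.
        v_k = generate(prompt, thoughts + [tau_k], regions)
        # How to refine: c_k = (r_k, revised caption), context includes step k.
        r_k, tau_hat_k = critique(prompt, thoughts + [tau_k], regions + [v_k])
        if r_k < threshold:
            # Local reflection: regenerate only this region from the revision.
            tau_k = tau_hat_k
            v_k = generate(prompt, thoughts + [tau_k], regions)
        thoughts.append(tau_k)
        regions.append(v_k)
    return thoughts, regions
```

Earlier regions are never regenerated in this sketch, which mirrors the local nature of the reflection described above.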

Visualizations

BibTeX

@article{guo2026thinking,
  title={Thinking-while-Generating: Interleaving Textual Reasoning throughout Visual Generation},
  author={Guo, Ziyu and Zhang, Renrui and Li, Hongyu and Zhang, Manyuan and Chen, Xinyan and Wang, Sifan and Feng, Yan and Pei, Peng and Heng, Pheng-Ann},
  journal={arXiv:2511.16671},
  year={2025}
}